Most businesses treat AI hallucinations like a weather event, something unpredictable that just happens to them. They ask ChatGPT about their company, get a nonsensical answer or a competitor description, and write it off as a “glitch.” They’re wrong.

In our 90-day experiment, we learned that hallucinations are rarely random errors. They are retrieval failures. When an AI misrepresents your brand, it’s because your digital footprint is too thin, too slow, or too inconsistent for the machine to verify.

The Safest Brand Wins in AI Search

AI models are risk-averse. Because their reputation is on the line with every answer, they don’t search for the “best” brand; they search for the one that is safest to explain.

If your data is buried in gated PDFs or written in clever marketing riddles that flatten into literal nonsense for a crawler, the AI will hesitate. When that happens, the engine issues a “fan-out query”: it grabs whatever information it can find from third-party sources to assemble an answer.

For us, this meant that in the early weeks of our experiment, ChatGPT confidently described No Fluff as a “job board” because “No Fluff Jobs” was easier for the machine to retrieve and verify than our brand-new site.

The 5–15 Second Crawl Window

AI acts like an impatient analyst. Its priority is speed-to-answer, not accuracy.

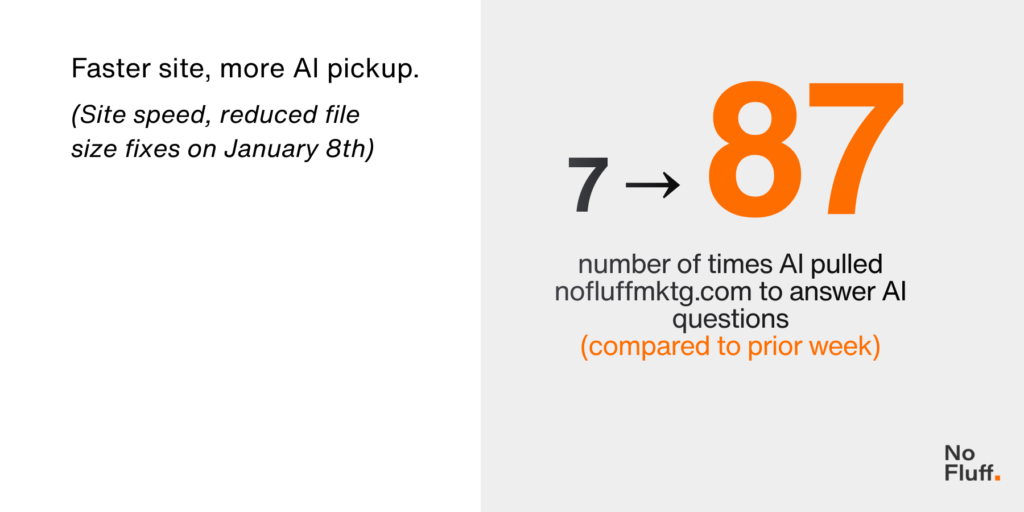

Our findings were clear: if a page takes longer than roughly 5 to 15 seconds to fetch and parse, the AI bot moves on. It won’t wait for your slow images to load. It simply pulls its answer, often a “hallucination,” from a faster, more accessible source. Once we optimized our site speed, the number of AI answers referencing our domain directly jumped from 7 to 87 almost overnight.

Why AI Models Hallucinate About Your Brand: Three Signal Failures

In our experiment, nearly every hallucination traced back to one of three measurable signal failures:

- Technical Friction: Your site is too slow or uses complex layouts that fragment content.

- Entity Confusion: Your brand name is non-distinct (like “No Fluff”), and/or you haven’t used true structured data (JSON-LD Schema) to tell the machine exactly who you are.

- Unfinished Trust: You have no third-party validation. AI cross-checks your site’s claims against the web. For B2B brands, when LinkedIn, directories, and credible publications do not back up your story, AI fills in the blanks on its own.

How to Reduce AI Hallucinations

You don’t “fix” a hallucination by arguing with the bot; you fix the public record.

Step 1: Fix Site Speed and Crawl Stability

If your pages take too long to load or rely on heavy scripts, AI systems may pull from secondary sources instead. Keep load times fast, eliminate crawl errors, and ensure important content is not hidden in PDFs or gated assets.
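A rough way to check a page against that crawl window is to time a plain HTML fetch, the way a simple bot would see it (no JavaScript, no images). The sketch below is illustrative only and is not the tooling used in the experiment. The `CRAWL_BUDGET_SECONDS` value, the user-agent string, and the function names are assumptions chosen for the example.

```python
import time
import urllib.request
from typing import Callable

# Assumption: use the low end of the 5-15 second window as a strict budget.
CRAWL_BUDGET_SECONDS = 5.0


def fetch_with_urllib(url: str, timeout: float = 15.0) -> bytes:
    """Download the raw HTML only, without rendering scripts or loading images."""
    request = urllib.request.Request(
        url, headers={"User-Agent": "crawl-budget-check/0.1"}  # hypothetical UA
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()


def measure_fetch(url: str, fetch: Callable[[str], bytes] = fetch_with_urllib) -> dict:
    """Time one fetch and report whether it fits inside the assumed budget."""
    start = time.perf_counter()
    body = fetch(url)
    elapsed = time.perf_counter() - start
    return {
        "url": url,
        "seconds": round(elapsed, 3),
        "bytes": len(body),
        "within_budget": elapsed <= CRAWL_BUDGET_SECONDS,
    }
```

Calling `measure_fetch("https://example.com/")` on each priority URL gives a quick list of pages that need attention first. Real AI crawlers may behave differently, so treat the result as a floor, not a guarantee.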

Step 2: Strengthen Schema Markup

Implement clean Organization and Service JSON-LD that matches your visible content exactly. Incomplete or conflicting structured data increases the risk of misattribution and of a competitor being described in your place.
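As a minimal sketch of what "matches your visible content" can mean in practice, the example below builds an Organization JSON-LD object and checks that its name and description appear verbatim in the page text. The company details are placeholders, the helper names are hypothetical, and the verbatim check is a deliberately strict assumption; only `name`, `url`, `description`, and `sameAs` from the schema.org Organization type are used.

```python
import json


def build_organization_jsonld(name, url, description, same_as):
    """Assemble a small schema.org Organization object."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        "sameAs": list(same_as),  # profile URLs that describe the same entity
    }


def missing_from_page(jsonld, visible_text):
    """Return the fields whose values do not appear verbatim in the visible text."""
    return [key for key in ("name", "description") if jsonld[key] not in visible_text]


def to_script_tag(jsonld):
    """Wrap the object in the script tag used to embed JSON-LD in HTML."""
    return (
        '<script type="application/ld+json">\n'
        + json.dumps(jsonld, indent=2)
        + "\n</script>"
    )


# Placeholder values for illustration only.
org = build_organization_jsonld(
    name="Example Co",
    url="https://example.com",
    description="Example Co is a B2B marketing agency.",
    same_as=["https://www.linkedin.com/company/example-co"],
)
```

If `missing_from_page(org, page_text)` returns anything, the markup and the page are telling two different stories, and one of them should be corrected before publishing.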

Step 3: Reinforce Signals Beyond Your Website

AI does not rely on your domain alone. Align your entity information across LinkedIn, Crunchbase, G2, and authoritative publications. External validation reduces hallucinations and improves citation accuracy.
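One way to audit that alignment is to compare each external profile description against a single canonical description and flag the ones that drift. This is a sketch under stated assumptions: the profile texts are collected by hand, the 0.8 similarity threshold is arbitrary, and character-level similarity from `difflib` is only a crude stand-in for how a model judges consistency.

```python
from difflib import SequenceMatcher


def normalize(text):
    """Lowercase and collapse whitespace so formatting differences are ignored."""
    return " ".join(text.lower().split())


def find_inconsistent_profiles(canonical, profiles, threshold=0.8):
    """Return {source: similarity} for descriptions that fall below the threshold."""
    flagged = {}
    for source, description in profiles.items():
        score = SequenceMatcher(
            None, normalize(canonical), normalize(description)
        ).ratio()
        if score < threshold:  # threshold is an illustrative assumption
            flagged[source] = round(score, 2)
    return flagged


# Placeholder descriptions for illustration only.
canonical = "Example Co is a B2B marketing agency."
profiles = {
    "LinkedIn": "Example Co is a  B2B marketing agency.",
    "Directory": "A job board for software developers.",
}
flagged = find_inconsistent_profiles(canonical, profiles)
```

Anything in `flagged` is a profile worth rewriting so that it repeats the canonical description instead of contradicting it.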

Step 4: Build Answer-First Content

Stop “wandering” in your writing. If your pages don’t start with a direct, factual answer, you are forcing the AI to guess.

Step 5: Chunk Content for Extraction

Break pages into clear, independent sections with descriptive subheads. AI systems extract blocks, not narratives. If a section cannot stand alone, it is harder to cite.
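A simple self-check is to split a page at its subheads and flag sections whose first words lean on earlier context. The sketch below assumes the page source is Markdown with `#`-style headings, and the list of "dangling" openers is a heuristic invented for this example, not a rule any AI system is known to apply.

```python
import re

# Heuristic assumption: openers that usually refer back to a previous section.
DANGLING_OPENERS = ("this ", "that ", "it ", "these ", "as mentioned", "as noted")


def split_sections(markdown_text):
    """Split Markdown into (heading, body) pairs, one per heading."""
    sections = []
    heading, body = None, []
    for line in markdown_text.splitlines():
        match = re.match(r"^#{1,6}\s+(.*)$", line)
        if match:
            if heading is not None:
                sections.append((heading, "\n".join(body).strip()))
            heading, body = match.group(1).strip(), []
        elif heading is not None:
            body.append(line)
    if heading is not None:
        sections.append((heading, "\n".join(body).strip()))
    return sections


def sections_that_lean_on_context(sections):
    """Return headings whose body opens with a word that needs prior context."""
    return [
        heading
        for heading, body in sections
        if body.lower().startswith(DANGLING_OPENERS)
    ]
```

A flagged section is not necessarily wrong, but it is a good candidate for a rewrite that names its subject in the first sentence.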

Step 6: Deploy an llms.txt File

Place an llms.txt file at your domain root to provide a plain-text roadmap of your brand, preferred descriptions, and priority URLs. This reduces ambiguity and supports correct attribution.
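For illustration, the sketch below generates a small llms.txt body. It assumes the layout described in the public llms.txt proposal (a title line, a short blockquote summary, then a section of annotated links); the brand name, summary, and URLs are placeholders, and the section title "Priority pages" is our own choice.

```python
def build_llms_txt(name, summary, priority_pages):
    """Render an llms.txt body from (title, url, note) tuples."""
    lines = [f"# {name}", "", f"> {summary}", "", "## Priority pages", ""]
    for title, url, note in priority_pages:
        lines.append(f"- [{title}]({url}): {note}")
    return "\n".join(lines) + "\n"


# Placeholder values for illustration only.
llms_txt = build_llms_txt(
    name="Example Co",
    summary="Example Co is a B2B marketing agency.",
    priority_pages=[
        ("Services", "https://example.com/services", "What we offer"),
        ("About", "https://example.com/about", "Who we are"),
    ],
)
```

The resulting text would be saved as `llms.txt` at the domain root. Generating it from the same canonical description used in the structured data keeps the two from drifting apart.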

Step 7: Refresh Critical Pages Regularly

Outdated content increases uncertainty. Updating key pages every 60 to 90 days reinforces relevance and improves trust weighting.
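A refresh schedule is easy to track with a small script. This sketch assumes you keep a mapping of page URLs to their last-updated dates (from a CMS export or sitemap, for example) and uses the 90-day upper bound as the cutoff; the URLs and dates are placeholders.

```python
from datetime import date, timedelta

REFRESH_INTERVAL_DAYS = 90  # upper end of the 60 to 90 day range


def pages_due_for_refresh(last_updated, today, max_age_days=REFRESH_INTERVAL_DAYS):
    """Return URLs whose last update is older than the allowed age, sorted."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(url for url, updated in last_updated.items() if updated < cutoff)


# Placeholder data for illustration only.
last_updated = {
    "https://example.com/services": date(2025, 1, 15),
    "https://example.com/about": date(2025, 5, 1),
}
due = pages_due_for_refresh(last_updated, today=date(2025, 6, 1))
```

Passing `max_age_days=60` tightens the schedule for pages that matter most, such as the home, services, and about pages.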

The Strategic Takeaway: Become the Safest Source to Cite

An AI hallucination is a stress test for your marketing foundation. If the machine is confused, your buyers probably are, too. Stop blaming the model and start engineering your signals so you become the safest source for the AI to cite.

Frequently Asked Questions

1. Why do AI engines hallucinate about companies?

AI hallucinations about companies are usually retrieval failures, not random glitches. When a model cannot quickly verify clear, consistent information about your brand, it assembles an answer from whatever sources it can confidently access. If your digital footprint is thin, fragmented, or inconsistent, the AI fills the gaps.

2. How do AI models decide which company to describe or recommend?

AI models are risk-averse. They prioritize what is safest to explain, not what is most innovative or most accurate. If another entity has a clearer structure, stronger third-party validation, or faster-loading pages, the model may default to that source because it is easier to retrieve and verify.

3. What technical issues most commonly cause brand misrepresentation?

Three signal failures typically drive hallucinations:

- Technical friction: Slow load times or complex layouts that prevent fast parsing.

- Entity confusion: A non-distinct brand name without structured data clarifying identity.

- Unfinished trust: Weak or missing third-party validation, making cross-verification difficult.

When these signals are weak, the model substitutes assumptions.

4. How can a company reduce AI hallucinations about its brand?

You reduce hallucinations by strengthening the public record. That means improving site speed, implementing clear structured data, standardizing brand descriptions across platforms, and publishing answer-first content that begins with direct, factual statements. When your signals are consistent and easy to retrieve, AI systems are more likely to cite you accurately.