Key Insights:

- New B2B brands can quickly gain AI visibility, but only when positioning and signals are clear from the start.

- Early visibility is limited more by brand confusion and vague messaging than by competitive pressure.

- The same work that improves AI visibility (clear structure, consistent language, and proof) also boosts traditional search and more.

- Early traction comes from owning specific buyer questions, not from chasing broad or unfocused exposure.

Context

No Fluff is a brand‑new B2B services partner in one of the noisiest spaces in marketing: visibility in AI-driven, answer-based search experiences. Agencies are hearing the same question from clients:

“Are we showing up in AI answers, not just in Google?”

B2B buyers are already there. Forrester research shows that 89% of B2B buyers now use generative AI to research vendors or solve business problems. Marketing teams are starting to catch up, with roughly 37% focusing on visibility in these answer-driven environments (Yahoo Finance).

To test whether a new brand can earn AI visibility (while being correctly identified and trusted) we served as the experiment. Like most startups, we began at true zero: day-one revenue, a minimal social footprint, a non-unique brand name, and a category dominated by established competitors.

Rather than assuming our frameworks would work, we documented in real time what it takes for a new B2B company to earn consideration in 90 days.

Problem

For many founders, the uncomfortable question is simple: Can a brand-new B2B company actually win in answer-driven discovery, or do established players with bigger budgets and longer histories always have the advantage?

For No Fluff, this was not hypothetical. It was a real launch constraint with several compounding factors:

- No historical footprint: AI systems rely strongly on past signals and accumulated references (training data).

- No authority shortcuts: No backlinks, press, investor halo, or third-party validation to lean on.

- A non-distinct brand name: “No Fluff” is a common phrase, which led to early confusion with generic language or similarly named companies.

- High risk of misclassification: Without clear signals, responses could ignore us or describe us incorrectly.

- Little margin for vagueness: Broad explanations would not create enough distinction to justify including us in AI answers.

None of this is unusual. It’s the starting line for most new brands. New sites typically wait months before they earn real visibility, and plenty of startups launch with names that are easy to confuse or overlook.

So the real question wasn’t theoretical. It was practical: could the right No Fluff be clearly recognized, understood, and chosen within a 90-day window? That question is what kicked off the Zero-to-Visible experiment.

Solution

Our approach was developed through direct application, not theory. By reverse-engineering buyer questions and testing how these systems read, explain, and validate a new brand, we established a repeatable visibility model.

Before touching the website or publishing content, we did the foundational work many brands skip or later have to undo. We analyzed the competitive landscape, clarified the problems we solve, and mapped how buyers are likely to ask about them. Because real user questions are not publicly available, we inferred them through keyword research, competitor analysis, and structured prompt exploration.

This resulted in a fixed universe of 150 buyer questions we wanted to show up for. That question map became the blueprint for everything that followed: positioning, content, structure, and measurement.

Our execution focused on three connected layers over the 90-day sprint:

- Understanding – Can we be read and categorized correctly?

- Explanation – Can we clearly explain topics that matter to buyers?

- Validation – Can external sources reinforce and repeat those explanations?

Implementation

We treated this as a controlled sprint, breaking the work into clear phases so we could see which specific actions moved visibility.

Pre-Launch: Market Fit, Prompt Map, and Site Build

- Mapped the competitive landscape: Identified which brands were already being referenced, which buyer questions were “winnable,” and where category language conflicted.

- Defined the business clearly: What No Fluff is, who it serves, and the outcomes it delivers, then translated that into positioning before web copy.

- Reverse-engineered buyer questions: Researched how B2B leaders actually frame questions in the space, based on keyword and competitor analysis.

- Locked a fixed benchmark set: Established 150 buyer questions we wanted to be present for, spanning branded, category, problem, comparison, and advanced decision scenarios.

- Designed for clarity over aesthetics: Built a simple site structure with minimal templates and pages.

- Instrumented measurement early: Logged exact wording, timestamps, platforms, full responses, references, competitors, and position for every test across the full 90 days.
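The measurement log described above could be modeled as one record per question, per platform, per run. The sketch below is illustrative only: the class name, field names, sample prompt, and JSON Lines format are assumptions, not the schema No Fluff actually used.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Optional


@dataclass
class BenchmarkRecord:
    """One run of one benchmark question on one platform (hypothetical schema)."""

    prompt: str          # exact wording sent to the platform
    platform: str        # e.g. "ChatGPT" or "Perplexity"
    timestamp: str       # ISO 8601 timestamp in UTC
    response: str        # full response text, stored verbatim
    references: List[str] = field(default_factory=list)   # URLs cited in the answer
    competitors: List[str] = field(default_factory=list)  # other brands named
    position: Optional[int] = None  # 1-based order of our mention; None if absent


def to_jsonl_line(record: BenchmarkRecord) -> str:
    """Serialize a record as one JSON Lines row for an append-only log."""
    return json.dumps(asdict(record), ensure_ascii=False)


# Placeholder values for illustration; not real benchmark data.
record = BenchmarkRecord(
    prompt="Which agencies help B2B brands show up in AI answers?",
    platform="Perplexity",
    timestamp=datetime.now(timezone.utc).isoformat(),
    response="(full response text goes here)",
    references=["https://example.com/article"],
    competitors=["Example Agency"],
    position=None,
)
line = to_jsonl_line(record)
```

Keeping the prompt wording and full response verbatim in each row makes week-over-week comparisons possible without re-running old tests.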

Weeks 1-2: Go-Live, Technical Set-Up, & Baseline Measurement

- Launched consistently across channels: Coordinated our site launch on LinkedIn, Medium, YouTube, and core profiles, all reinforcing the same core definitions.

- Aligned entity signals: Standardized founder and brand bios across platforms so descriptions and relationships matched everywhere.

- Centralized technical signals: Unified schema, metadata, and other machine-facing settings, and aligned them with on-page content to avoid conflicts.

- Triggered initial indexing: Submitted the site across major search platforms to initiate crawling and indexing.

- Established weekly testing: Ran the fixed 150-question benchmark weekly in ChatGPT, Perplexity, Gemini, and Google AI Overviews to track movement over time.

- Removed crawl friction: Audited robots.txt, sitemaps, and hosting, then improved site speed by optimizing images, fonts, and non-critical JavaScript.
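One part of a crawl-friction audit, checking whether robots.txt blocks specific crawlers, can be sketched with Python's standard-library `urllib.robotparser`. The robots.txt content, the example URL, and the list of crawler tokens below are all assumptions for illustration. A real audit would load the live file and confirm current crawler names in each vendor's documentation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that unintentionally blocks one AI crawler.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

User-agent: GPTBot
Disallow: /
"""

# Crawler tokens to check (an assumed list; verify against vendor docs).
CRAWLERS = ["Googlebot", "Bingbot", "GPTBot", "PerplexityBot"]


def audit_robots(robots_txt, url, crawlers):
    """Return {crawler: allowed} for one URL under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in crawlers}


result = audit_robots(SAMPLE_ROBOTS_TXT, "https://example.com/services", CRAWLERS)
blocked = [agent for agent, allowed in result.items() if not allowed]
print(blocked)  # crawlers that cannot fetch the page
```

In this sample, the dedicated `GPTBot` group overrides the permissive wildcard group, which is the kind of conflict an audit is meant to surface.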

Weeks 3-4: Channel Focus & Retrieval-Ready Content

- Focused on channels AI frequently pulled from: Narrowed effort to the channels that consistently appeared as sources in benchmark results.

- Built decision-ready pages: Created content for the top 8–10 priority questions using buyer language, clear headings, and FAQ structures.

- Modeled proven structures: Analyzed 300+ high-performing AI-visible articles to identify length and formatting patterns and applied them to our own content.

- Produced structured video: Published YouTube content with question framing, transcripts, and metadata.

- Continued testing: Maintained weekly testing against the same 150-question benchmark.

Weeks 4–6: Entity Clarification & Signal Expansion

- Secured authoritative listings: Claimed core directory profiles to reduce brand confusion and strengthen external references.

- Expanded explanation-first content: Published deeper material on AI-visibility and what it takes for a new company to show up clearly.

- Published methodology openly: Turned internal processes into how-to specifics that could be referenced externally.

- Participated selectively in Reddit: Engaged in relevant discussions to reinforce our presence (without self-promotion!)

- Validated tools against reality: Cross-checked various visibility tools to identify hallucinations and determine which were best for producing deeper metrics.

- Started targeted outreach: Reached out to partners and media where our work actually adds value, treating coverage as proof, not a badge collection.

Results (First 45 Days)

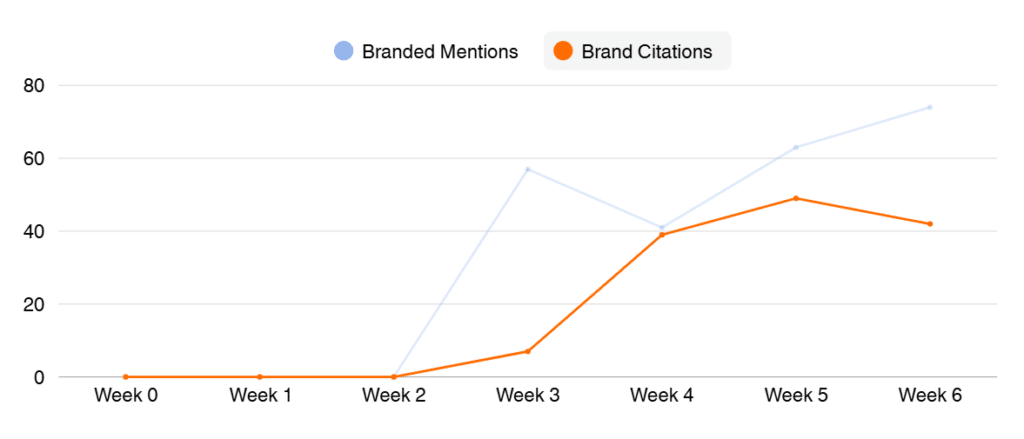

By day 45, we went from zero to consistent visibility across AI answers, search results, and founder-led distribution. That timeline is faster than what most new brands experience. Within AI systems, No Fluff cleared emerging-brand share-of-voice thresholds, achieved consistent prompt-set coverage, and shifted from misattributed mentions to majority-correct citations.

After fixing early name confusion, we could see the real picture: AI systems were no longer mixing us up with other “No Fluff” brands and were increasingly mentioning and citing us directly, including our own site. Search and social followed the same path. We compressed what’s usually a months-long ramp into about six weeks, leading to strong early Google impressions and rankings, daily visibility that looked more like an established site, and LinkedIn founder reach that scaled well beyond typical early-stage B2B norms.

The takeaway was simple: when a brand is easier to understand and trust, AI visibility and broader discovery improve together.

| Metric | 45 Day Result | New‑Brand Benchmarks |
| --- | --- | --- |
| Brand Mention Rate in AI answers | 16.5% mention rate | Emerging brands: 5–10% AI SOV; established leaders: 20–30%+ across a focused prompt set (Source: getpassionfruit) |
| Credible Presence (non‑hallucinated citations) in AI answers | 61.6% of AI mentions correctly reference No Fluff (early weeks mapped to other “No Fluff” brands) | 86% of AI citations go to brand‑managed sources when structured signals are strong. (Source: investors.yext) |
| Ownership Rate (AI cites nofluff.ai) in AI answers | ~11% of relevant AI answers cite No Fluff’s own site as source | 11% of domains receive citations across major AI systems at all, skewing to brand‑managed properties. (Source: investors.yext) |
| Overall AI visibility & citations | Visible in 39 of 150 prompts (~26% coverage), 74 mentions, 42 with citations | Established brands see 20–40% prompt‑set visibility (Source: getpassionfruit) |
| Google Search Impressions | ~5,000 total impressions in first 45 days | Brand‑new sites first‑month impressions often in the hundreds to low thousands (Source: advertisingbusiness) |
| Average Position in Google | Average position ~2.6 in Google search | New domains rarely hold stable top‑10 rankings in the first 1–3 months. (Source: webfx) |
| Daily Google Impressions Run‑Rate | Averaging 200+ impressions per day | First 30 days: single-digit to low double-digit daily impressions are typical for new sites. (Source: advertisingbusiness) |
| Founder‑Led LinkedIn Impressions | Grew from <2,000 to ~14,000 impressions per post | Average post lands around 500–3,000 impressions, while high‑performing posts reach 5,000–20,000 impressions (Source: LinkedIn) |
| Multi‑Platform Content | Retrieval‑friendly LinkedIn + Medium content correlates with more AI citations from those URLs | AI citation likelihood increases 2–3× for brands active across multiple platforms. (Source: thedigitalbloom) |
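As a quick check on the arithmetic, the prompt-set coverage figure can be recomputed from the raw counts reported in the table. The sketch below uses only those counts. The share of mentions that carry a citation (42 of 74) is derived here for illustration and is not a figure the table reports. The 16.5% and 61.6% figures depend on denominators the table does not state, so they are not reproduced.

```python
def rate(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return round(100 * part / whole, 1)


# Counts taken from the "Overall AI visibility & citations" row.
TOTAL_PROMPTS = 150
PROMPTS_WITH_VISIBILITY = 39
TOTAL_MENTIONS = 74
MENTIONS_WITH_CITATIONS = 42

coverage = rate(PROMPTS_WITH_VISIBILITY, TOTAL_PROMPTS)      # reported as ~26%
cited_share = rate(MENTIONS_WITH_CITATIONS, TOTAL_MENTIONS)  # derived, not reported

print(f"Prompt-set coverage: {coverage}%")
print(f"Mentions with citations: {cited_share}%")
```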

What’s Next

The hardest part for any new business isn’t building something good. It’s getting picked when no one knows your name yet. Most real buyer questions happen early, before someone is searching for a specific company. They’re asking things like “what’s best?” or “who should I trust?” And in those moments, the same names keep showing up because they’ve been around longer and have more proof piled up around them.

Our focus now is closing that gap:

- Measuring ownership, not just presence: Whether we’re included when buyers don’t name us, how often we appear relative to competitors, and whether our content gets reused as the explanation.

- Earning validation beyond our own site: Comparison content, third-party contributions, and placements where buyers already look for guidance, built steadily over time.

- Turning the sprint into a system: Turning what works and what breaks into a repeatable approach that other B2B teams can apply without guessing.

For B2B teams in crowded markets, this sprint is proof in public. Established players do have advantages. The open question is whether a new brand can earn AI visibility with the right focus, clarity, and signals. This work shows that it can.