Welcome to Week 5 of the Zero-to-Visible experiment. If you are new here, this is a live experiment testing what it actually takes for a new brand to show up inside AI answers (ChatGPT, Perplexity, Gemini, and more) in 90 days.

At this stage, the question is no longer whether small gains in AI visibility are possible, but what makes them sustainable. This week, we focused on turning early signals into repeatable processes.

First: A Note on Metrics

We’re being deliberate with visibility numbers right now.

This sprint has surfaced real limitations in how AI visibility is measured, especially in terms of timing consistency, retrieval reliability, and hallucinations. Rather than relying on what any single “AI visibility analytics platform” reports, we’re validating results through automated, recurring prompt testing and exporting raw outputs for our own analysis.

Different tools run prompts at different times, limit how many prompts you can test, restrict access to raw outputs, and handle brand matching inconsistently. Those differences make it hard to run a valid, controlled visibility test. Before we report any overall AI lift, we’re making sure our baseline is reliable.

That said, we did see one clear win. After site speed optimizations, the number of AI answers that directly referenced our website domain increased from 7 to 87!
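To make the brand-matching problem concrete, here is a minimal Python sketch of tallying exported raw answers under one fixed matching rule, so counts stay comparable no matter which tool ran the prompts. It assumes you already have the raw answer text for each prompt run. The `PromptRun` record and helper names are hypothetical, the answers are made up, and the domain `nofluffmktg.com` is an assumption taken from our contact email, not a confirmed detail of how the 7-to-87 count was produced.

```python
import re
from dataclasses import dataclass

# Hypothetical shape of one exported prompt run (field names are illustrative).
@dataclass
class PromptRun:
    prompt: str
    platform: str
    answer_text: str

def brand_pattern(brand: str) -> re.Pattern:
    # Case-insensitive, whole-word match that tolerates a space, hyphen, or nothing
    # between words: "No Fluff", "no-fluff", and "NoFluff" match; "no fluffy" does not.
    # Caveat: ordinary prose such as "no fluff, just results" still matches.
    words = [re.escape(w) for w in brand.split()]
    return re.compile(r"\b" + r"[\s-]?".join(words) + r"\b", re.IGNORECASE)

def domain_pattern(domain: str) -> re.Pattern:
    # Matches the domain with or without a scheme, a subdomain like "www.", or a path,
    # but not when it is glued to a longer hostname such as "notnofluffmktg.com".
    return re.compile(r"(?<![\w-])" + re.escape(domain) + r"\b", re.IGNORECASE)

def summarize(runs: list[PromptRun], brand: str, domain: str) -> dict:
    # Count runs (not occurrences), so one answer citing the site twice counts once.
    b, d = brand_pattern(brand), domain_pattern(domain)
    return {
        "runs": len(runs),
        "brand_mentions": sum(1 for r in runs if b.search(r.answer_text)),
        "domain_citations": sum(1 for r in runs if d.search(r.answer_text)),
    }

# Made-up answers, for illustration only.
runs = [
    PromptRun("top AI visibility firms", "chatgpt", "Consider No Fluff (https://www.nofluffmktg.com/)."),
    PromptRun("top AI visibility firms", "perplexity", "Agencies such as NoFluff focus on this."),
    PromptRun("top AI visibility firms", "gemini", "Take a no fluffy approach."),
]
print(summarize(runs, "No Fluff", "nofluffmktg.com"))
# prints {'runs': 3, 'brand_mentions': 2, 'domain_citations': 1}
```

The design choice that matters is applying the same rule to every export: if one tool counts "NoFluff" and another does not, the two numbers are not measuring the same thing.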

Here’s everything else we focused on this week:

Choosing Fewer Channels on Purpose

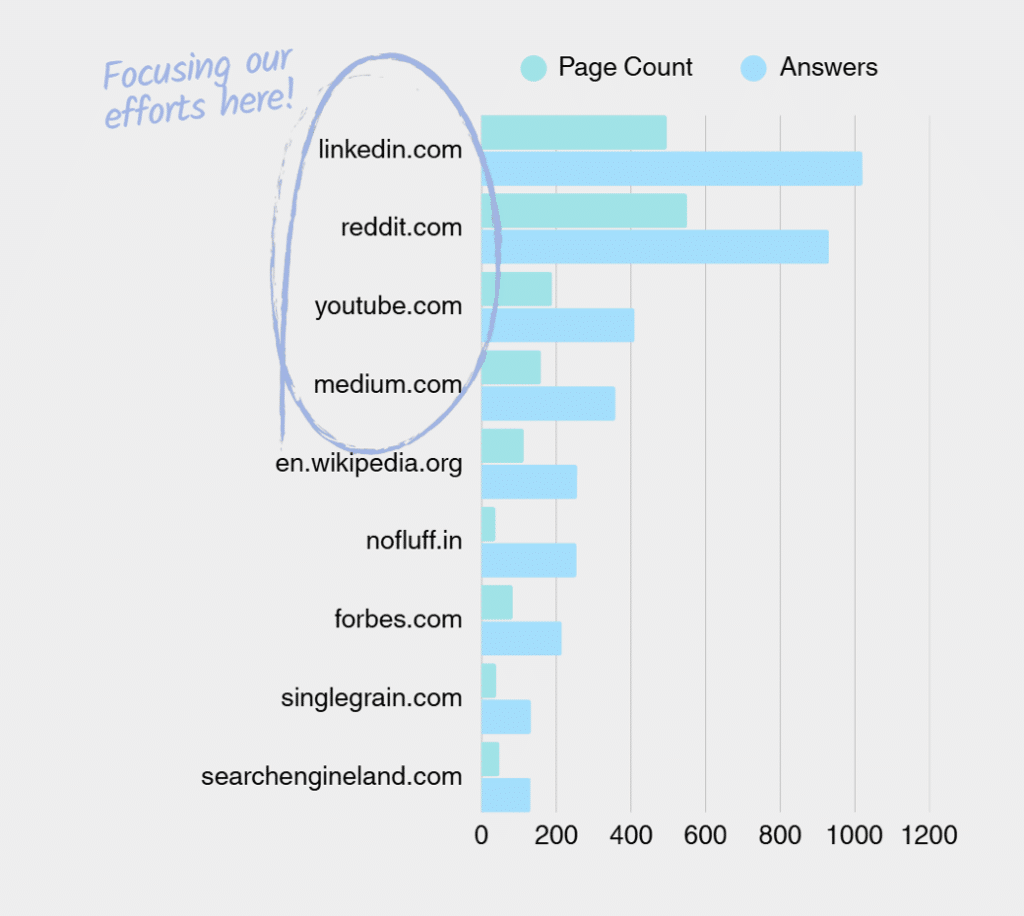

We narrowed our core digital channels to LinkedIn, Medium, YouTube (long- and short-form), and our website, with Reddit coming later. Our analysis showed these are the places AI most consistently retrieves information from across our ~150-prompt set. Covering more channels does not mean more visibility if AI does not reliably pull from them.

Retrieval-Ready Content

As mentioned last week, rather than spreading effort across ~150 prompts, we focused execution on 10 prompts that show consistent retrieval behavior and have lower competition.

We first identified the content we needed to build or update to directly answer our prompt questions. (We detail why content freshness matters in a separate post.) From there, we analyzed 300+ blog and Medium posts already cited in our prompt set to assess their structural consistency. Here are some solid findings you can use:

- Open each section or blog with a direct answer to the main question

- Use literal headers that mirror real questions

- Structure sections to stand alone, so AI can lift text without needing earlier context or references

- Combine full sentences (context) with bullet points (facts)

- Add explicit definitions, FAQs, and key takeaway sections

FAQ Refinements

Knowing the core prompts that we need to focus on, we updated our site’s FAQ page (both text and schema). This work closely followed the same principles laid out in The Practical Guide to AI-Visible FAQ Pages. We treated each prompt like a retrieval problem and designed content so AI could extract clean, bounded answers.
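For the schema side of that work, here is a minimal Python sketch that turns question/answer pairs into schema.org `FAQPage` JSON-LD, one `Question` with one `acceptedAnswer` per pair. It is an illustration, not necessarily how our page was built; the helper names `faq_jsonld` and `to_script_tag` are hypothetical, and the sample pair is taken from this newsletter’s own FAQ.

```python
import json

def faq_jsonld(pairs: list[tuple[str, str]]) -> dict:
    """Build a schema.org FAQPage object from (question, answer) pairs.

    Keep each answer self-contained, so it can be quoted without the rest of the page.
    """
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question.strip(),
                "acceptedAnswer": {"@type": "Answer", "text": answer.strip()},
            }
            for question, answer in pairs
        ],
    }

def to_script_tag(data: dict) -> str:
    # JSON-LD is typically embedded in the page inside a script tag of this type.
    # Escaping "</" as "<\/" (a valid JSON escape) keeps answer text from closing the tag early.
    body = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"

faq = faq_jsonld([
    ("What is the Zero-to-Visible experiment?",
     "Zero-to-Visible is an ongoing experiment that documents how a new brand becomes "
     "discoverable, explainable, and citable by AI systems."),
])
print(to_script_tag(faq))
```

Generating the schema from the same pairs that render the visible FAQ text keeps the markup and the page in sync, which matters when the goal is a clean, bounded answer AI can extract.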

Directory Placements

We strengthened core entity signals through Google, Bing, and Yelp listings. These sources help reduce ambiguity and hallucination, especially when AI stitches together answers from multiple domains. Strong entity grounding increases the likelihood of AI pickup.

Deeper Understanding of AI Hallucinations

One of the biggest takeaways this week: AI doesn’t answer from one source; it fans out across many.

When a question is asked, the model doesn’t go find “the best page.” It expands the question into many sub-queries, runs retrieval passes across different sources, formats, and domains, pulls from whatever it can fetch quickly and confidently, and then synthesizes a coherent answer. That means hallucinations aren’t random; they’re often retrieval failures across fan-out queries.

Instead of asking, “How do we get AI to say the right thing?”

We are now asking, “How do we make it easy for AI to retrieve our content first?”
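As a toy illustration of the fan-out idea, here is a Python sketch in which one question is expanded into sub-queries and each sub-query either retrieves a snippet or becomes a gap. Real AI systems are far more complex and do not publish their retrieval logic in this detail; `expand`, `retrieve`, and the ExampleCo index are hypothetical stand-ins. What the sketch shows is that the gaps, not the retrieved snippets, are where a model would be left to fill in on its own.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

@dataclass
class FanOutResult:
    grounded: dict[str, str] = field(default_factory=dict)  # sub-query -> retrieved snippet
    gaps: list[str] = field(default_factory=list)            # sub-queries nothing answered

def fan_out(
    question: str,
    expand: Callable[[str], list[str]],
    retrieve: Callable[[str], Optional[str]],
) -> FanOutResult:
    """Toy fan-out: split a question into sub-queries and try to ground each one."""
    result = FanOutResult()
    for sub_query in expand(question):
        snippet = retrieve(sub_query)
        if snippet:
            result.grounded[sub_query] = snippet
        else:
            # Nothing retrievable for this sub-query: a generator would have to guess here.
            result.gaps.append(sub_query)
    return result

# Hypothetical index of what a brand has published in retrievable form.
index = {
    "what does ExampleCo do": "ExampleCo is a B2B growth firm.",
    "where is ExampleCo listed": "ExampleCo has Google, Bing, and Yelp listings.",
}

def expand(question: str) -> list[str]:
    # Hard-coded stand-in for whatever query expansion a real system performs.
    return ["what does ExampleCo do", "ExampleCo pricing", "where is ExampleCo listed"]

result = fan_out("Should I hire ExampleCo?", expand, index.get)
print(result.gaps)  # ['ExampleCo pricing']
```

In this framing, “make it easy for AI to retrieve our content first” means shrinking the gap list: publishing clear, retrievable answers for the sub-queries a buyer question is likely to fan out into.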

That’s this week!

Stat of the Week

ChatGPT accounts for roughly 64% of worldwide AI chatbot website visits (down from ~86% a year ago), while Gemini has grown to about 21% of traffic (up from only ~5% last year), according to Search Engine Land.

Google is clearly gaining ground quickly as it integrates Gemini into its products and search experiences.

Fresh Reads

In each newsletter, we share our favorite articles and breaking news.

- Publishers fear AI search summaries and chatbots mean ‘end of traffic era’ (The Guardian)

- Google teams up with Walmart and other retailers to enable shopping within Gemini AI chatbot (AP News)

- Wikimedia Foundation announces new AI partnerships with Amazon, Meta, Microsoft, Perplexity, and others (TechCrunch)

As always, reach out anytime at katie@nofluffmktg.com if you’ve got questions or if you’re building your own visibility story too.

Frequently Asked Questions About Zero-to-Visible and No Fluff

What is the Zero-to-Visible experiment?

Zero-to-Visible is an ongoing experiment that documents how a new brand becomes discoverable, explainable, and citable by AI systems. It tracks which signals influence whether AI systems recognize and recommend a company during early buyer discovery.

What does “AI visibility” mean in Zero-to-Visible?

In Zero-to-Visible, AI visibility means whether AI systems can recognize a brand, accurately explain what it does, and include it in AI-generated answers when buyers ask category-level questions.

Who is running the Zero-to-Visible experiment?

The Zero-to-Visible experiment is run by No Fluff, a B2B growth firm focused on how AI systems recognize, explain, and recommend brands in AI-generated answers.

Why is No Fluff running this experiment publicly?

No Fluff runs Zero-to-Visible publicly to document real-world signals that influence AI visibility. The goal is to replace speculation about AI search with observable patterns and repeatable methods.

Are the results specific to one AI platform?

No. Zero-to-Visible observes patterns across multiple AI systems, including retrieval-based and generative models. While individual outputs vary, consistent signals tend to produce similar visibility outcomes across platforms.

Can other B2B brands replicate these results?

Yes. When the same structural signals (clear brand definitions, consistent category positioning, structured content, and third-party validation) are applied consistently, similar AI visibility patterns can be reproduced.

Does Zero-to-Visible replace SEO?

No. Zero-to-Visible shows how AI visibility builds on SEO foundations. SEO enables access to content, while Zero-to-Visible focuses on whether AI systems trust, explain, and recommend a brand once that access exists.